I’ve been adding thoughts to this blog since 2005. I come here to sort out my ideas, to have something to link to for those ideas, and to keep a sense of where my thinking was at a given point in time. The last six months have been AI all the time for me, and I’ve been in some excellent conversations around the topic. Some of these suggestions are the same ones I would have given two years ago, and some even 20 years ago, but they all keep coming up one way or another.

I turned this into a list because I just couldn’t come up with a theme for them. 😛

Discipline specific AI inspired learning goals

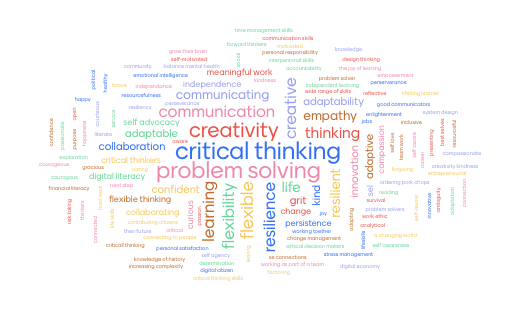

This one is starting to float to the top every time I talk about AI. One of the challenges of talking about digital strategy for a whole university is that different fields are often impacted so differently that the same advice can’t be given across disciplines.

I’m feeling more comfortable with this suggestion. We need to be adding learning objectives/goals/whatever-you-call-thems at both the program and maybe even course level. The details of them will be a little different by discipline and even by parts of disciplines, but broadly speaking they would include something like

- How to write good prompts for black box search systems (ChatGPT/Google) that return useful/ethical/accurate results in your discipline

- Choosing appropriate digital locations/strategies for asking questions

- Strategies for verifying/improving/cross-referencing results from these systems

- How is AI used by professionals in your discipline (good and bad)

You could say ‘yeah, dave, that’s digital literacy, we’ve been doing it (not doing it) for almost a generation now.’ I agree with you, but I think it’s becoming increasingly important. Search results have been (in my non-scientific anecdotal discussions) getting less useful and these GPT based systems are approaching ubiquity. Students aren’t going to understand the subtlety of how it works in our profession. Many of us won’t know either.

Teach/model Humility

This one’s easy. Say “I don’t know” a lot. Particularly if you’re in a secure work position (this can always be tricky with contingent faculty). Encourage your students to say ‘I don’t know’ and then teach them to try and verify things – sometimes to no avail. It takes practice, but it starts to feel really good after a while. There is NO WAY for anyone to know everything. The more we give in to that and teach people what to do when they don’t know, the better things are going to be.

When we get results from an AI chatbot, we need to know if we are the wrong person to analyze them. We might need to get help.

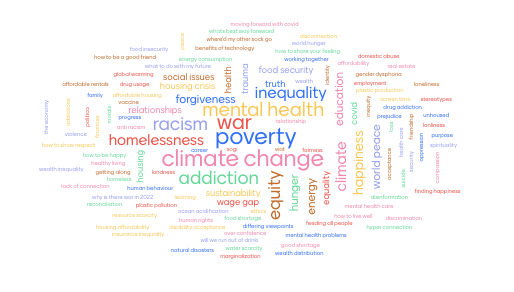

Spend time thinking about the WHY of your old assessments

Had an awesome conversation with a colleague a few days ago talking about the how and why of teaching people facts before we expect those people to do things with those facts. This keeps coming up.

- We teach facts so that they can have them loosely at their fingertips when they are trying to do more complex things.

- We often create assessments so that students will have the scaffolding required to do what is, often, boring memorizing. We know they’ll be happy (or at least more competent) later, so we ‘offer to give them grades’ or ‘threaten to take grades away from them’ if they don’t memorize those things.

- Those assessments are now often/mostly completed by students using AI systems in ways that no longer require students to memorize things.

If we need students to have things in their heads, or do critical thinking or whatever, we need to clearly understand what we want AND explain the reasoning to students. At this point, the encouragement/threats that have been our assessments are just not going to be that effective. Does an essay done with GPT4 still teach critical thinking or encourage close reading of texts? Does a summary shoved in an AI system and copy/pasted into a text box help anyone?

Spend even more time thinking about your new AI infused assessments

Lots of interesting conversations recently about how to incorporate AI into activities that students are going to be doing. First and foremost, make sure you read some of the excellent articles out there about the ethical implications of making this decision. We have tons of deep, dark knowledge about the ethical implications of writing the way we’ve been doing for a few thousand years. If we’re going to take a step into this new space, we need to take time to think about the implications. This Mastodon post by Timnit Gebru is a good example of a consideration that just didn’t exist before AI. AI not only produces problematic texts; the more it produces problematic texts, the more problematic texts there are to influence the AI. It’s a vicious cycle.

https://dair-community.social/@timnitGebru/110328180482499454/embed

No really. There are some very serious societal/racial/gender/so-many-other implications to these tools.

Talk to your students about AI, a lot

This is not one of those things you can just kind of ignore and hope will go away. These AI tools are everywhere. Figure out what your position is (for this term) and include it in your syllabus. Bring it up to your students when you assign work for them to do. Talk to them about why they might/might not want to use it for a given assignment.

Try and care about student data

I know this one is hard. Everyone, two months ahead of a course they are going to teach, is going to say “oh yes, I care about what happens to my students’ data”. Then they see something cool, or they want to use a tracking tool to ensure the validity of their testing instrument, and it all goes out the window. No one is expecting you to understand the deep, dark realities of what happens to data on the web. My default is “if I don’t know what’s happening to student data, I don’t do the thing”. Find someone at your institution who cares about this issue. They are, most likely, really excited to help you with this.

You don’t want your stuff given away to random corporations, whether it be your personal information or your professional work. Make sure that you aren’t doing it to someone else.

Teach less

In my last blog post, I wrote about how to adapt a syllabus and change some pedagogical approaches given all this AI business. The idea from it that I’ll carry forward to this one is teach less. If there’s anything in the ‘content’ that you teach that isn’t absolutely necessary, get rid of it. The more stuff you have for students to remember or to do, the more they are going to be tempted to find new ways to complete the work. More importantly, people can get content anywhere. The more time you spend demonstrating your expertise and getting into meaningful content/discussions that take a long time to evolve, the more you are providing them with an experience they can’t get on YouTube.

Be patient with everyone’s perspective

People are coming at this issue from all sides right now. I’ve seen students who are furious that other students are getting better marks by cheating. I’ve seen faculty who feel betrayed by their students. People focusing on trust. Others on surveillance. The more time we take to explore each other’s values on this issue, the better we’re all going to be.

Take this issue of a teacher putting wrong answers online to trap students. He really seems to think he’s doing his job. I disagree with him, but calling him an asshole is not really going to change his mind.

Engage with your broader discipline on how AI is being used outside of the academy

This is going to be different everywhere you go, but some fields are likely to change overnight. What might have been true for how something worked in your field in the summer of 2022 could be totally different in 2023. Find the conversations in your field and join in.

Focus on trust

Trying to trap your students, to track them or watch them seems, at the very least, a bad use of your time. It also kind of feels like a scary vision of an authoritarian state. My recommendation is to err on the side of trust. You’re going to be wrong some of the time, but being wrong and trusting feels like putting better things into the world. Building trust with your students ‘can’ lead to them having a more productive, more enjoyable experience.

- Explain to them why you are asking them to do the work you are giving them

- Explain what kind of learning you are hoping for

- Explain how it all fits together

- Approach transgressions of the social contract (say a student used AI when you both agreed they wouldn’t) as an opportunity to explain why they shouldn’t.

- Focus on care.

As teachers/faculty we have piles of power, already, over students. Yes, it might be slightly less power than we had 50 years ago, but I’m going to go ahead and suggest that it might be a good thing.

11 of 10 – Be aware of where the work is being done

I recognize that full time, tenured faculty are busy, but their situation is very different than a sessional faculty member trying to rewrite a course. Making these adaptations is a lot of work. Much of that work is going to be unpaid. That’s a problem. Also, for that matter, ‘authentic assessment’ is way more work.

Same for students. If you are changing your course, don’t just make it harder/more work.

Final thoughts

I’m wary of posting this, because as soon as I do I’ll think of something else. As soon as I wrote that, I thought of number 11. I’ll just keep adding them as they come to mind.

Times are weird. Take care of yourselves.