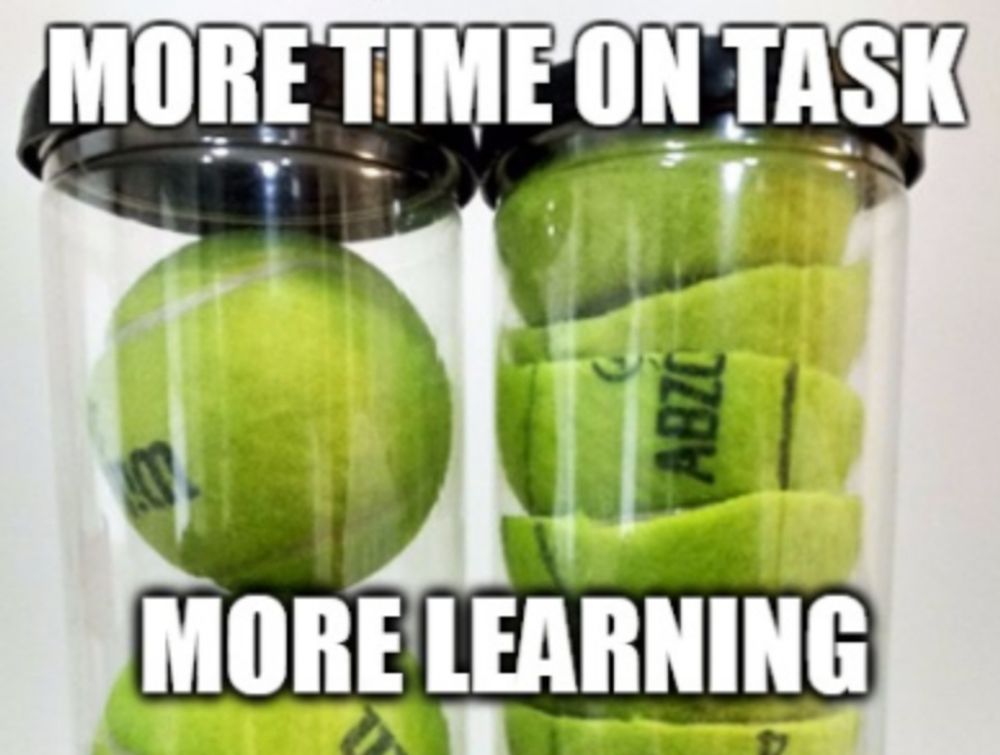

I was cleaning up some old image files on my computer over the weekend and came upon this meme I tried to make about ten years ago. I don’t think I quite hit the language right, but, basically, I was trying to say that the more we try to make learning more ‘efficient’, the more we break the thing we were originally trying to do. I think of learning as a complex, deeply uncertain process that’s different (to some degree) for every student and (I think, critically) different for every teacher.

Since I wasn’t quite able to make the joke land, I will now ruin it by trying to explain it 🙂

Why is this a problem?

I think we can agree, at least, that we don’t all agree on what learning IS.

That’s troubling when it so happens that helping people learn is what you do for a living. One of the most important places we see this, I think, is when we look at the results of research in education. As I am wont to teach in my BEd classrooms, you can find research in education that will support any position. You can find hundreds of articles that say Learning Styles are ‘true’ and hundreds that claim the opposite. You can hear that direct instruction is better or that project-based learning is better… SCIENTIFICALLY. It’s all ‘better learning’.

So I teach my students that they need to decide what they think things like ‘learning’ or ‘better’ actually mean before they can choose the research that will help them make decisions. If they see a piece of research that says “this approach leads to better learning outcomes”, does that mean that the students did better on a memory test? Are they ‘happier’? Did they claim on a multiple choice survey that it made them ‘more engaged’?

‘Better’ is too often a synonym for ‘got higher grades’ or ‘remembered’ or ‘was more efficient’. I understand that there are people who do see remembering faster as better learning, but it’s not everyone. I’m not opposed to people remembering things or to efficiency… I just don’t think it’s the most important thing. I value engagement over memory.

Certainty turns learning into a task

If we tell students what to learn, the best students just go ahead and do what they’re told. And that sounds great, right? But, as Beth McMurtrie writes in the Chronicle this week, it has some unfortunate side effects. If my job is to sit in a classroom, peruse a VERY specific rubric and hit all the targets on it, then doing what I’m told is what is going to make me successful. I’m learning that learning is something that I simply receive. No passion. No interest. Just the contractual obligation to give the answer expected.

There are all kinds of challenges with this, from bored faces in class, to resistant students, to the inevitable “just tell me what you want me to put in the assignment and I’ll do it” demand from students. Students become customers. The classroom becomes transactional.

Maybe the biggest concern for me is a lack of belief in nuance. If you think that someone else has the answers to the questions, you start to think that you are dumb because you never know the answer AND, maybe, you start to look for people who are convinced they have the answer. (And I don’t mean people who say “this is the scientific consensus on this issue given this and this research”, which is totally fine, but people who argue that “this is true!”.) People gravitate to the biggest megaphone.

GenAI replaces tasks that are algorithmic

And this is why GenAI is so powerful right now. These tools can produce text that appears to speak meaningfully about how much a student cares about their community. They can find the answer to your physics problem. If there’s a step-by-step rubric or a specific, generally agreed upon answer that you’re expecting from your students, GenAI is going to produce it. It is, in effect, very good at producing those cut up pieces of tennis ball. (To be fair, it’s a specific problem for creative writing as well, which it can often mimic reasonably well.)

What it isn’t going to do is what my bookkeeper does for me every six months or so when we get together to talk about my taxes. She knows me as a person and helps me organize things so that they work for me. Turns out people think that her job is going to get taken away by GenAI. From her perspective, the organizing of numbers is such a small part of what she does that it’s not really going to affect her bottom line. She uses GenAI, but mostly to train her staff to write professional emails. Her job is full of nuances, full of dealing with people and decision making – all tasks she isn’t going to turn over to an algorithm.

The more I think about it, the more GenAI looks like a clarion call telling us we’ve been giving away the farm for years. We all seem to think that other people’s professions are at risk from GenAI, but not our own. Being a professional is, in most cases, knowing how to weigh multiple, often conflicting values to make a decision in an uncertain situation. It’s about judgement. A professional answers the question ‘what should we do?’, not ‘what is true?’

We have lots of pedagogies that help us learn in ‘shoulds’, pedagogies that are built for judgement and that help us develop a tolerance for ambiguity. The answer to the question ‘what do we do about GenAI?’ is to first ask ourselves what ‘better’ means when it comes to learning, and build with that in mind. If better means ‘finish my word problem faster’, then that will send us down one path. If it means ‘students love learning and want to keep doing it’, then we go down another path entirely.

We mostly get to pick this. But we need to think about what we value first.

eg… (This was originally part of the post, but I chopped it out and added it here at the end in case anyone wanted to read it.)

Take a look at the way that we interpret program-level goals. If a capstone goal for a K-12 system is something like ‘is a good citizen’, we’ve obviously got a wide range of ways of judging what that could mean. If a group of educational developers is charged with creating a way of ‘measuring’ that, they’ll find some things that can work and others that are really hard. Something like ’40 hours of community service’ might be a measurement that people feel like they can do… hours being measurable. But something like ‘has an interest in their community’ might be a little harder. In practice, both of these create real challenges.

The forty hours, while certainly measurable, are really difficult to do in practice. If there are 500 kids at the local high school, getting all 500 of them an authentic community service experience is a bit of a nightmare. It turns out that kids from families that are already involved in the community can do it really easily, while kids who don’t have that privilege struggle, so those kids get whatever they can manage. Often that’s a token experience. (Several students from a university I worked with complained privately about the ‘shoe sorting job’ that several of them got at a local shelter… it took about 30 minutes of the eight hours they were there, but the shelter staff had nothing else for them to do. This job, they told me, happened every year.)

The ‘interest in the community’ creates a different type of challenge. Let’s say you decide that you are going to get the students to reflect on their community and what they could do to improve it. Every single student knows what they are supposed to say. Many 15-year-olds (and, frankly, many adults) don’t actually care about improving their community – for any number of reasons. Being forced to write an essay about it forces that 15-year-old to lie about their feelings about community in order to get the grade.

Neither of those activities is ‘teaching’ citizenship. They don’t help convince that 15-year-old of the value of participating in their community. They reinforce whatever privilege or perspective the child already has… in addition to teaching them to lie for a grade. If we can’t measure it, does that mean we shouldn’t do it? I hope not.